Linux Audio: The modular experience

How it works outside theory

9 June, 02010

In another article I discussed the limitations of the modular approach, limitations derived more from theoretical analysis than from actual work. Now, having spent several weekends making music in a modular environment, I am ready to share that experience.

It is important to note that I make electronic music, which basically means programming a complex arrangement rather than playing things live.

General thoughts

My overall impression is positive. The workflow I ended up with is almost fluent, with a few minor issues, most of which are easy to address and concern GUI details. As often happens in the world of GNU/Linux, you learn to expect problems, but everything turns out not to be so bad after all. At the same time, as will be shown later, I currently have some technical concerns about the modular approach in terms of system resources.

I would also like to address the main argument of my criticism of the modular approach. I theorized that the principal weakness of any modular system is that its stability and its support for the necessary standards depend on many developers, while an IME (Integrated Music Environment) can be perfected by one group of developers who have it under their full control. While I still believe this to be true as a general statement, I have also observed an effect of software maturity: there is a point after which a piece of software is stable enough, and its workflow tested enough, for it to perform fine most of the time. Over time, most actively developed software will reach this point, and so a Linux Audio modular system can enjoy a good level of stability and usability. Of course, there are always those obscure but sometimes really cool programs which nobody develops anymore and which have issues. This will be discussed later in the "Standalone vs Plugin" section.

JACK Transport

To start with the most important thing: a synchronized transport system is definitely a must for any modular studio, otherwise it is virtually unusable for making music that has to sit on a precise tempo grid. Fortunately, JACK Transport actually delivers what it promises. When I was writing the article mentioned above, I had not actually tried Transport, but now that I have, I can say it is a solid, state-of-the-art system, supported by at least three major sequencers (Qtractor, Ardour and Hydrogen), which is already enough for any music composing. At one point I did run into a weird situation where the playhead positions in Qtractor and Hydrogen would not match up if playback was started from the middle of the tune, but I have not been able to reproduce the bug since.
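To see why starting playback from any of these programs lands on the same spot, it helps to remember that JACK Transport shares a frame position between clients, and each client derives bars and beats from it. The sketch below assumes a constant tempo and meter with no tempo map; the function and its defaults (48 kHz, 120 BPM, 1920 ticks per beat) are hypothetical and not JACK's API, although JACK's own position structure carries similar bar, beat and tick fields.

```python
from dataclasses import dataclass


@dataclass
class BBT:
    bar: int   # 1-based, as most sequencers display it
    beat: int  # 1-based
    tick: int  # 0-based


def frame_to_bbt(frame: int, frame_rate: int = 48000, bpm: float = 120.0,
                 beats_per_bar: int = 4, ticks_per_beat: int = 1920) -> BBT:
    """Convert a shared transport frame into bar/beat/tick.

    Hypothetical helper: assumes one constant tempo and meter for the whole song.
    """
    frames_per_beat = frame_rate * 60.0 / bpm
    total_beats = frame / frames_per_beat
    whole_beats = int(total_beats)
    tick = int((total_beats - whole_beats) * ticks_per_beat)
    bar, beat = divmod(whole_beats, beats_per_bar)
    return BBT(bar=bar + 1, beat=beat + 1, tick=tick)
```

As long as every client reads the same frame and agrees on tempo and meter, `frame_to_bbt(96000)` gives bar 2, beat 1 in all of them, which is why it does not matter which program starts playback.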

Looping in JACK Transport is reportedly not implemented yet. I don't know what kind of looping is meant, because I have no problem looping part of a tune in Qtractor. It not only loops the tune within Qtractor, it also loops Hydrogen along with it, with no issues. Perhaps this is not sample-precise looping, but it is seamless to the ear and good enough to at least play along while you search for good sounds. If you try to record onto a loop that is not sample-precise, however, you will end up with a track that sooner or later goes off-beat. I have not tested this, so it remains an open question whether this loop mode is good enough as a backing template for improvisations that you later want to add to the tune.
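Why a loop that is not sample-precise eventually goes off-beat is simple arithmetic. Assume, hypothetically, that the host can only jump back at the start of an audio period, so every pass is rounded up to a whole number of periods; the surplus then accumulates with each pass. This is an illustrative model, not a description of how Qtractor actually loops, and the helper names are made up.

```python
import math


def bars_to_frames(bars, bpm, frame_rate, beats_per_bar=4):
    """Exact length of `bars` bars in frames at a constant tempo."""
    return round(bars * beats_per_bar * 60 * frame_rate / bpm)


def loop_drift_frames(loop_frames, period, passes):
    """Accumulated drift in frames after `passes` loops.

    Hypothetical model: each pass is rounded up to a whole number of
    periods, because the jump back only happens at a period boundary.
    """
    actual = math.ceil(loop_frames / period) * period
    return (actual - loop_frames) * passes
```

Under these assumptions, a 4-bar loop at 120 BPM and 44.1 kHz with 128-frame periods runs 96 frames long on every pass, roughly 22 ms after ten passes, while the same loop at 48 kHz divides evenly into periods and does not drift at all.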

Modular setup

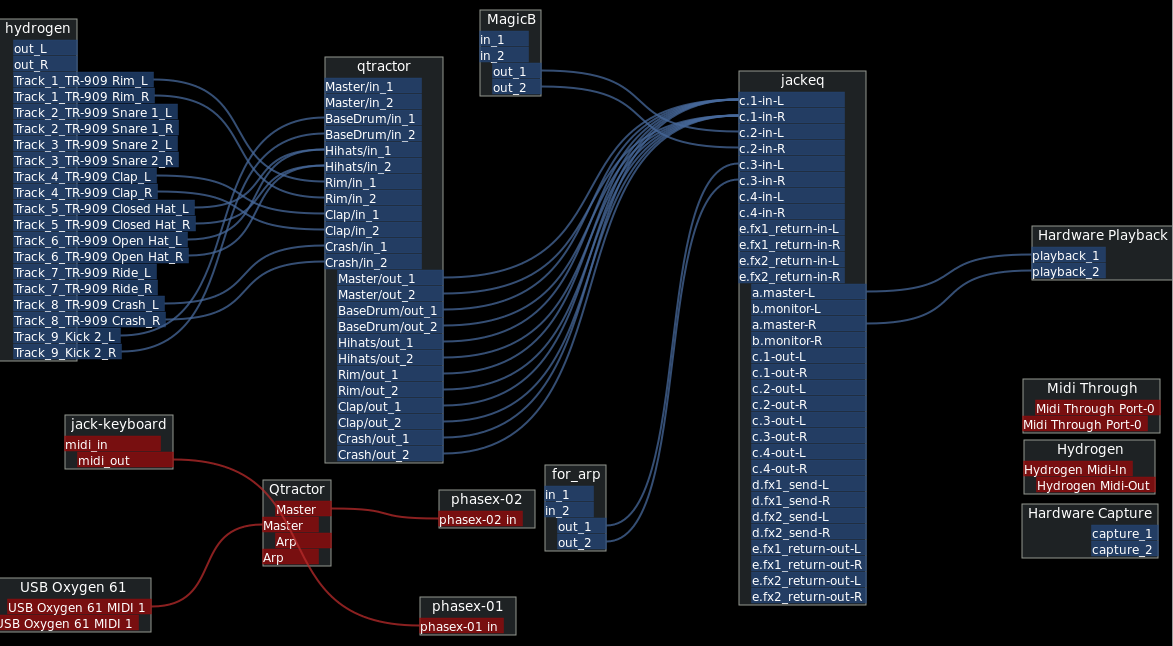

I will now guide you through my setup. It might not be perfect, and I am still working on improving it, but the general idea should be clear.

Let's see what we have.

First of all, we will get nowhere without a session handler. I am using Ladish. The reasons why using Ladish is a good idea are explained here. Having set up a project in Ladish, we can save all our work and reopen the whole thing later.

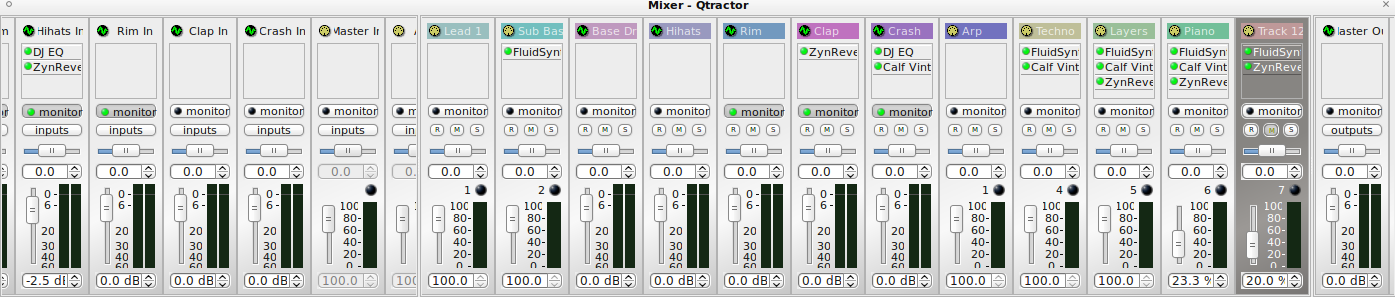

The main component here is Qtractor. It sequences MIDI, and, as you can see, Hydrogen's tracks are channeled into Qtractor as well. I do this because Hydrogen has issues with effects: if you apply a delay effect to an instrument, the effect ignores the instrument's volume envelope (I did not check whether the same applies to the instrument filter). So if you have, say, made a very tight hihat by editing its volume envelope, the delayed signal sounds as though the envelope were at its default settings. Sometimes this gives interesting results, but most of the time you don't want it. It seems the volume envelope is missing somewhere in the Signal->FX->Audio chain, but I am not the one to know. Both the git version and the latest stable version show the same problem. I also found that when a Hydrogen song is loaded, some of the effects do not load correctly: the GUI shows the same settings, but the sound is somewhat different. To combat these issues, I have channeled everything into the Qtractor mixer and decided to host all effects in one place, so there is no chance of inconsistencies.
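The envelope problem can be illustrated with a toy model of the behaviour described above. It is not Hydrogen's code: the functions are hypothetical, the "envelope" simply silences a sample after a few frames, and the delay is a wet-only echo generator. The only difference between the two results is whether the echoes are built from the enveloped sample or from the raw one.

```python
def envelope(signal, hold):
    """Crude volume envelope: keep the first `hold` samples, silence the rest."""
    return [s if i < hold else 0.0 for i, s in enumerate(signal)]


def echoes(signal, d, gain=0.5, repeats=3):
    """Wet-only delay: `repeats` copies of the signal, each `d` samples later and quieter."""
    out = [0.0] * (len(signal) + d * repeats)
    for r in range(1, repeats + 1):
        for i, s in enumerate(signal):
            out[i + r * d] += (gain ** r) * s
    return out


def mix(dry, wet):
    """Sum two signals, padding the shorter one with silence."""
    n = max(len(dry), len(wet))
    dry = dry + [0.0] * (n - len(dry))
    wet = wet + [0.0] * (n - len(wet))
    return [a + b for a, b in zip(dry, wet)]


hit = [1.0] * 8                      # a sustained sample, 8 frames long
tight = envelope(hit, hold=2)        # the "tight hihat" after envelope editing

correct = mix(tight, echoes(tight, d=10))   # envelope applied before the FX
reported = mix(tight, echoes(hit, d=10))    # echoes built from the raw sample
```

In `correct`, each echo is as short as the dry hit; in `reported`, every echo carries the full-length sample, which matches the untightened delay tail described above.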

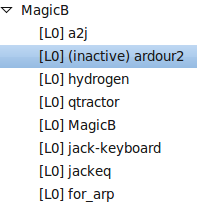

You can also see the "MagicB" and "for_arp" applications. These are actually two Phasex synths, named after the presets they use. I did this because Ladish cannot yet edit an app's command line while the app is open, but it does let you edit the app's name. So naming an app after the preset it uses lets you stop the app later and still remember what to add as a command-line parameter. (This is being worked on, and future versions of Ladish will make it easy to add such things without restarting anything.) Another reason to name apps is to avoid entries like "phasex-01" and "phasex-02", which only make things confusing.

Since we've touched upon the Phasex synth, you might be wondering why I need JACK Keyboard there. This is yet another workaround. Phasex has a known problem: it does not stop a sound unless it has been explicitly sent a note off signal. This means that if you stop playback in Qtractor in the middle of a note, Phasex will keep playing that note. So you often find yourself stopping Qtractor only when you are sure it is not in the middle of a Phasex note in the piano roll. If you don't manage that, the solution is to press and release that key on a MIDI keyboard, which sends the note off signal. In this particular tune phasex-01 plays a quick arpeggio, so stopping Qtractor outside of a note is impossible. My MIDI keyboard is playing the other Phasex, so I use JACK Keyboard to stop trailing notes when they occur. A nasty bug, actually; I hope it gets fixed soon. I did report it, but have not yet received a response from the developer.
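What JACK Keyboard does by hand for one key is what a MIDI "panic" does for all of them. The sketch below only builds the raw bytes; the function name is hypothetical, and actually sending them requires a MIDI output (for example through a JACK MIDI or ALSA client, not shown). It uses the standard Channel Mode messages All Sound Off (CC 120) and All Notes Off (CC 123); I do not know whether Phasex honours those, which is why there is also a brute-force option that sends an explicit Note Off for every key.

```python
NOTE_OFF = 0x80          # status nibble for Note Off
CONTROL_CHANGE = 0xB0    # status nibble for Control Change
ALL_SOUND_OFF = 120      # Channel Mode message
ALL_NOTES_OFF = 123      # Channel Mode message


def panic_messages(channels=range(16), brute_force=False):
    """Build raw MIDI messages that should silence hanging notes.

    Sends All Sound Off and All Notes Off on each channel; with
    brute_force=True it also sends a Note Off for all 128 keys, for synths
    that ignore Channel Mode messages.
    """
    msgs = []
    for ch in channels:
        msgs.append(bytes([CONTROL_CHANGE | ch, ALL_SOUND_OFF, 0]))
        msgs.append(bytes([CONTROL_CHANGE | ch, ALL_NOTES_OFF, 0]))
        if brute_force:
            msgs.extend(bytes([NOTE_OFF | ch, note, 0]) for note in range(128))
    return msgs
```

`panic_messages()` yields 32 short messages covering all 16 channels, while `panic_messages(channels=[2], brute_force=True)` yields 130 messages for channel 3 alone, which shows why the brute-force route is a last resort.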

Moving on, we take all the audio and channel it into JACK EQ. This is an important step, since it lets you work easily with the overall output and have just one Audio Out to deal with, rather than getting lost in a mess of virtual wires (who knew, even virtual wires get messy quickly). I have channeled the Phasex synths into JACK EQ as well, though this is not the best solution, and I think I will get into the habit of channeling all external synths into Qtractor instead, so that, just as with Hydrogen, I have everything inside one mixer.

It would also be good to take only the Master Out from Qtractor instead of all those individual wires. Qtractor does let you specify the inputs and outputs of a bus, but when I chose Master Out for all those Hydrogen inputs, there was no sound. Ideally we would mix everything in Qtractor and take just the resulting Master Out. That would effectively eliminate the need for an additional abstraction layer in the form of JACK EQ. I might be doing something wrong here, so if I figure it out, I will update this paragraph.

Anyway, another handy feature of Ladish is the ability to stop applications while leaving them in the project's app list, so that you can fire up an app when it is needed. In the screenshot you can see that I actually have Ardour in the project. I start Ardour when I want to record everything: it loads the necessary project, and then I can roll.

The last app I have not mentioned is a2j. It comes with Ladish and bridges ALSA MIDI and JACK MIDI, a very important utility. In fact, I cannot do without it anymore. It should be started with every Ladish project by default (this is planned).

So, as we can see, the setup is not too complex. The basic idea is to get everything into one mixer and then take the overall output at the other end. Thanks to JACK and its audio routing, all of this is possible.

The workflow is actually quite fluent. Qtractor should be in Full Transport mode, meaning it can act as both master and slave, so no matter where you start the tune from (Hydrogen, Ardour or Qtractor), you get the same result. I did experience a small glitch in Qtractor: if playback is started from Hydrogen, the first half-second is inaudible. This happens ONLY at the very beginning of the track; there are no such issues when working in the middle of a tune. In practice, this means that if you want to record the tune, just start it from Qtractor and you will be okay. Other than that, it's great. In fact, having a drum module separate from the MIDI sequencer is kind of cool and makes you feel sophisticated. No irony here, I really mean it. It reminds me of the early 90s, when people linked hardware modules together and had to sync them up.

At the same time, you do not have to use Hydrogen for drums if you don't want to. There are plenty of high-quality drum soundfonts you can use instead. The only drawback at present is that you get no volume envelope, as soundfont players on Linux do not yet have that functionality. Contact your local plugin developer to fix this.

Creating a template

Once the modules are set up, everything feels a lot like an IME. We will talk about some of the issues later on, but in general audio/MIDI routing and JACK Transport do their job well. Setting all of this up, however, is time-consuming and takes away the playful element of just firing up your sequencer and jamming. A lot of creative ideas start from playful experiments. To facilitate that, you can create a starter template.

It should consist of the apps you use, already connected to each other. In my case I have Hydrogen channeled into Qtractor's mixer, and Qtractor itself with a starter project open. The Qtractor starter project has several MIDI channels with empty fluidsynth plugins loaded, so that I don't have to click around menus. I saved this as a Ladish project, so I just load it and am instantly ready to fool around. Just keep a copy of the template lying around somewhere in case you accidentally overwrite something in your joy. Which leads us to the first problem we typically run into with a modular setup.

Problem 1: Naming

Starting off with a minor problem: naming is more a matter of habit. When you have many apps open and you like what you've made, you now have to save everything. It is reasonable to give everything the same name, so if your track is called "Sunset", it would be prudent for the Hydrogen, Qtractor and Ladish projects to share that filename. Having to save everything separately, I think, will never go away, since there will always be a program without Level 3 Ladish support. Not that it's too difficult: saving operations are automatic, and most of the time we don't even notice them. It is a problem only at the start, when you have made something cool and want to save it to disk. Then you have to "Save As" the Hydrogen song, then "Save As" the Qtractor song, and possibly something else.

Another naming aspect is channel naming. In the example above you can see that I had to name mixer channels in Qtractor with meaningful names so that I have no problem connecting Hydrogen to Qtractor and easily understand what goes where. Again, a matter of habit and perhaps a good habit too.

Still (I dream), wouldn't it be nice if it worked the other way around? In flowcanvas (Ladish has flowcanvas built in, as does Patchage), you would drag a wire from Open Hat into the Qtractor box, and that would automagically create an audio input with the same name as in Hydrogen, without having to go into Qtractor to create a track, type its name and create a new input. I wonder whether this is technically feasible.

All in all, flowcanvas should be developed more actively. It is an important piece of software and needs lots of improvements. For example, a keyboard shortcut that merges the separate L and R channels into one wire would save me from dragging two wires every time. Why would anyone want to channel L into one place and R into another? Very rarely, I would argue.
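Until such a shortcut exists, the "one wire for L and R" idea can be approximated outside the GUI with the `jack_connect` command-line tool, which takes a source port and a destination port. The sketch below turns one stereo connection into the two `jack_connect` commands it needs. The suffix convention (`_L`/`_R`, `_1`/`_2`) and the port names in the example are assumptions; real applications name their ports in different ways.

```python
# Hypothetical naming convention for stereo port pairs (left suffix, right suffix).
STEREO_SUFFIXES = [("_L", "_R"), ("_1", "_2")]


def stereo_pair(port: str):
    """Return (left, right) port names if `port` looks like half of a stereo pair."""
    for left, right in STEREO_SUFFIXES:
        if port.endswith(left):
            return port, port[: -len(left)] + right
        if port.endswith(right):
            return port[: -len(right)] + left, port
    return None


def connect_stereo(src: str, dst: str) -> list[list[str]]:
    """Expand one 'wire' into jack_connect commands, L to L and R to R."""
    s, d = stereo_pair(src), stereo_pair(dst)
    if s is None or d is None:
        # Not recognisably stereo on both sides: connect just the ports given.
        return [["jack_connect", src, dst]]
    return [["jack_connect", s[0], d[0]], ["jack_connect", s[1], d[1]]]
```

For example, `connect_stereo("Hydrogen:out_L", "jack_eq:in_1")` produces commands for `out_L` to `in_1` and `out_R` to `in_2`; each command list could then be passed to `subprocess.run` on a machine with JACK running.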

But flowcanvas is a separate article. A book, even.

Problem 2: Key shortcuts

The thing is, every program has its own keyboard shortcuts and key combinations, and they are not always consistent. Most of the time you'll be struggling with operations like copy/paste, selecting and deselecting things, and dragging clips around. What works one way in Ardour works quite differently in Qtractor, and differently again in Hydrogen. Qtractor, in my opinion, is less intuitive, as the traditional combinations do not always work. In the piano roll, after having worked with other piano rolls, you would expect holding Ctrl while selecting notes to add them to the selection, but to do that you have to hold Shift. Holding Ctrl actually inverts the selection, making selected notes unselected and unselected notes selected.

But the shortcuts for bringing up the mixer really steal the show. This is a big one: all three programs use different shortcuts. Of the three, Qtractor's is the most conventional, as it uses F9. I've seen F9 used in LMMS and FL Studio as well, so for me personally this is much more convenient. By now I simply know that F9 means mixer.

Of course, you do get used to that. But I still think that software which is intended to be used as modules of a bigger system should somehow unify shortcuts, at least for such basic operations like bringing up a mixer and selecting stuff.

Problem 3: Freezing

Freezing tracks is important. If you have lots of things playing and you know that a theme is more or less complete and can be rendered, why not go for it? But I have yet to figure out an elegant way to do this within the scheme described above. Rui, the author of Qtractor, strongly discourages running several instances of Qtractor, quote: "Weird things can happen". And indeed they do: recording from one Qtractor into another resulted in an audio file that was strangely off-beat.

What then? Ardour? Perhaps. Maybe someone can come up with a better way. I wish it were possible to freeze a track from within a sequencer, but it doesn't seem to be technically possible and/or easy to implement. You can record a loop within Qtractor by channeling the plugin output to a new audio track, but not only does this, honestly speaking, suck as a method, it also introduces unavoidable latency. At 128 frames it is unnoticeable, but still.
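For scale, the delay introduced by one period is its length in frames, N, divided by the sample rate, f_s. The article does not state the sample rate, so the two common rates are shown here as an assumption:

$$
t = \frac{N}{f_s}, \qquad
\frac{128}{44\,100\ \text{Hz}} \approx 2.90\ \text{ms}, \qquad
\frac{128}{48\,000\ \text{Hz}} \approx 2.67\ \text{ms}
$$

This counts a single 128-frame period only; if the audio interface or server adds further buffering, the offset of the recorded track would be correspondingly larger.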

Which brings us to the question of resources.

Problem 4: Resources

Below you can see the Qtractor mixer from the project discussed in this article. Apart from two Phasexes and Hydrogen, there are 5 fluidsynths loaded, plus some effects. Not that much, actually. I have already had LMMS projects that were heavier on both counts, number of instruments and effects, and they did not lag, even without running a low-latency audio server and kernel. Yet here, with the addition of a 5th soundfont, my project started to glitch.

I am not a coder, but knowing the basics of how computers work, I strongly suspect that opening several instances of a plugin is not the same as opening several instances of a standalone application. I am wondering whether a modular setup commonly takes up more RAM and CPU. In this particular case the source of the problem may be the good old DSSI Fluidsynth plugin, but what if those Phasex synths were LV2 plugins? What if Hydrogen were an LV2 module? I really need some experienced coders to clear up this question once and for all.

Which leads to the next point:

Problem 5: Standalone vs Plugin

Even if the question of resources turns out to be irrelevant (say, an app takes the same amount of RAM and CPU whether it is standalone or a plugin), a plugin would still be preferable.

If we look at the problem we ran into with Phasex, we will see that if it were a plugin, the problem would be less likely to occur. A plugin API would probably have a generalized way of dealing with note offs. And even if it did not, a plugin should understand a "stop" signal from the host sequencer and cease all playing notes.
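The bookkeeping needed to honour a "stop" is small: remember which notes are sounding and release them when the transport stops. The class below is a hypothetical sketch of that idea, not the API of DSSI, LV2 or any other plugin standard. It follows the MIDI convention that a Note On with velocity 0 counts as a Note Off.

```python
class HeldNotes:
    """Track sounding notes so that a transport stop can release them."""

    def __init__(self):
        self._held = set()  # (channel, note) pairs currently sounding

    def feed(self, msg: bytes) -> None:
        """Update the held set from one raw MIDI message."""
        if len(msg) < 3:
            return  # not a note message; ignore
        status, channel = msg[0] & 0xF0, msg[0] & 0x0F
        note, velocity = msg[1], msg[2]
        if status == 0x90 and velocity > 0:
            self._held.add((channel, note))
        elif status == 0x80 or (status == 0x90 and velocity == 0):
            self._held.discard((channel, note))

    def on_stop(self) -> list:
        """Return Note Off messages for every held note, then forget them."""
        offs = [bytes([0x80 | ch, note, 0]) for ch, note in sorted(self._held)]
        self._held.clear()
        return offs
```

A host or plugin that calls `on_stop()` whenever the transport stops would release exactly the notes left hanging, instead of relying on someone pressing the key again.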

Additionally, we would have fewer wires and boxes to deal with in Patchage. It is fun, to an extent, but if you need many synths, your attitude might change, and what was fun becomes confusing.

But most importantly, a plugin's state is saved along with the host project, which means there would be no need to save presets, name apps and edit command lines in Ladish. No matter how well this might be realized in Ladish, what we really want is to just press "Save" and, with that one simple action, store the whole project with all of its presets, parameters and connections.

Plugins are like that.

Go for plugins.

Problem 6: Automation

And finally, the biggest concern of all: automation. That means recording knob movements, or at least editing external synth parameters from within a sequencer in non-real time (yet another killer argument in favor of plugins, which should be easier to automate because they live inside the sequencer, so there is no need for voodoo magic).

I don't know how to do this at all in a modular setup. Qtractor's parameter automation is not there yet: the automation in the piano roll is not smooth but comes in impulse-like steps, which makes it almost unusable for automating things like filters. It is also quite difficult to set up. I could not find out which MIDI message controls which parameter in an external synthesizer, and there was no way to do MIDI learn from within Qtractor.
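A smooth filter sweep sent over MIDI is really just many closely spaced Control Change values. The sketch below interpolates linearly between two breakpoints and drops repeated values; the function name, the tick units and the step size are hypothetical, and which CC number a given synth listens to is exactly the synth-specific detail the paragraph above complains about, so it is left out.

```python
def cc_ramp(start_tick, start_value, end_tick, end_value, step=10):
    """Linearly interpolate a MIDI CC value between two breakpoints.

    Returns (tick, value) pairs every `step` ticks, always including the end
    tick, with values clamped to 0..127 and consecutive duplicates dropped.
    """
    ticks = list(range(start_tick, end_tick, step)) + [end_tick]
    span = end_tick - start_tick
    points = []
    for tick in ticks:
        frac = (tick - start_tick) / span if span else 1.0
        value = round(start_value + frac * (end_value - start_value))
        value = max(0, min(127, value))
        if not points or points[-1][1] != value:
            points.append((tick, value))
    return points
```

For example, `cc_ramp(0, 0, 100, 127, step=25)` gives `[(0, 0), (25, 32), (50, 64), (75, 95), (100, 127)]`; a smaller step makes the sweep smoother at the cost of more MIDI traffic.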

Automation in Ardour is pretty good. Is there a way to somehow attach parameters in Ardour to external synths, like Phasex? So far, I found no way to do it. If I do, this paragraph will be updated.